Podman

Info

This method is supported by the Plumber community.

This page describes how to set up a self-managed instance of Plumber using podman.

💻 Requirements

- GitLab instance version >= 17.7

- A Linux server. Plumber runs in podman containers using a YAML configuration. Specifications:

  - OS: Ubuntu or Debian

  - Hardware

    - CPU: x86_64/amd64 with at least 2 cores

    - 4 GB RAM

    - 250 GB of storage for Plumber

  - Network

    - Users must be able to reach the Plumber server on TCP ports 80 and 443

    - The Plumber server must be able to access the internet

    - The Plumber server must be able to communicate with the GitLab instance

    - The installation process requires write access to the DNS zone to set up the Plumber domain

    - If the server is not reachable from the internet, or if you want to use your own certificate for HTTPS, you need to be able to generate a certificate for the Plumber domain during the installation process

  - Installed software

        unqualified-search-registries = ["docker.io"]

🛠️ Installation

📥 Set up your environment

Clone the repository on your server:

    git clone https://github.com/getplumber/platform.git plumber-platform
    cd plumber-platform

Create your configuration file:

    cp .env.example .env

📋 Configure Organization

In your .env file:

- If you want to connect Plumber to a specific GitLab group only: add the path of the group in the ORGANIZATION variable (to run the onboarding, you must be at least Maintainer in this group).

      ORGANIZATION="<group-path>"

- If you want to connect Plumber to the whole GitLab instance: leave the ORGANIZATION variable empty (to run the onboarding, you must be a GitLab instance Admin).

      ORGANIZATION=""

📄 Configure Domain name

Edit the .env file by updating the values of the DOMAIN_NAME and JOBS_GITLAB_URL variables:

    DOMAIN_NAME="<plumber_domain_name>"
    JOBS_GITLAB_URL="https://<url_of_your_gitlab_instance>"

Example with domain name 'plumber.mydomain.com' for Plumber and 'gitlab.mydomain.com' for GitLab:

    DOMAIN_NAME="plumber.mydomain.com"
    JOBS_GITLAB_URL="https://gitlab.mydomain.com"

Create DNS record

- Name: <plumber_domain_name>
- Type: A
- Content: <your-server-public-ip>

Info

A certificate will be generated automatically with Let’s Encrypt when the application launches.
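Before moving on, the two domain values can be sanity-checked. The sketch below is only an illustration: `check_domain_settings` is a hypothetical helper, not part of the Plumber repository, and it assumes (as in the template above) that `DOMAIN_NAME` is a bare host name and that `JOBS_GITLAB_URL` starts with `https://`.

```shell
# Hypothetical helper, not shipped with Plumber.
# Assumption: DOMAIN_NAME is a bare host name and JOBS_GITLAB_URL uses https.
check_domain_settings() {
  domain=$1
  gitlab_url=$2
  case "$domain" in
    ""|*/*)
      echo "DOMAIN_NAME must be a bare host name, got: '$domain'"
      return 1
      ;;
  esac
  case "$gitlab_url" in
    https://?*) ;;
    *)
      echo "JOBS_GITLAB_URL must start with https://, got: '$gitlab_url'"
      return 1
      ;;
  esac
  echo "domain settings look consistent"
}

# Example values taken from this page
check_domain_settings "plumber.mydomain.com" "https://gitlab.mydomain.com"
```

After loading `.env` into the shell, the same check can be run as `check_domain_settings "$DOMAIN_NAME" "$JOBS_GITLAB_URL"`.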


🦊 Configure GitLab OIDC

Plumber uses GitLab as an OAuth2 provider to authenticate users. Let’s see how to connect it to your GitLab instance.

Create an application

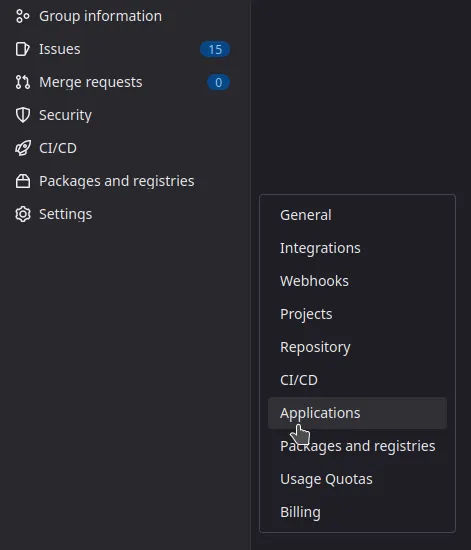

Choose a group on your GitLab instance in which to create the application. It can be any group. Open the chosen group in the GitLab interface and navigate to Settings > Applications:

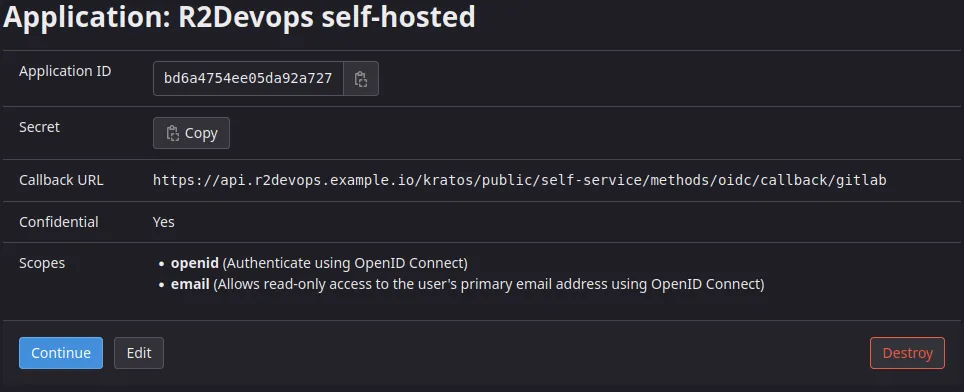

Then, create an application with the following information:

- Name: Plumber self-managed
- Redirect URI: https://<plumber_domain_name>/api/auth/gitlab/callback
- Confidential: true (leave the box checked)
- Scopes: api

Click on Save Application and you should see the following screen:

Update the configuration

In the .env file, copy/paste the Application ID and the Secret from the application you just created:

    GITLAB_OAUTH2_CLIENT_ID="<application-id>"
    GITLAB_OAUTH2_CLIENT_SECRET="<application-secret>"
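The redirect URI registered in GitLab has to match the Plumber domain exactly, so it can help to derive it from the domain name rather than type it by hand. A minimal sketch, where `redirect_uri` is a hypothetical helper introduced only for illustration:

```shell
# Hypothetical helper: builds the redirect URI for the GitLab application
# from the Plumber domain name, following the pattern given on this page.
redirect_uri() {
  printf 'https://%s/api/auth/gitlab/callback\n' "$1"
}

# Example with the domain name used on this page
redirect_uri "plumber.mydomain.com"
# prints: https://plumber.mydomain.com/api/auth/gitlab/callback
```

With `.env` loaded in the current shell, `redirect_uri "$DOMAIN_NAME"` gives the value to paste into the Redirect URI field.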

🔐 Generate secrets

Generate random secrets for all components:

    sed -i "s/REPLACE_ME_BY_SECRET_KEY/$(openssl rand -hex 32)/g" .env
    sed -i "s/REPLACE_ME_BY_JOBS_DB_PASSWORD/$(openssl rand -hex 16)/g" .env
    sed -i "s/REPLACE_ME_BY_JOBS_REDIS_PASSWORD/$(openssl rand -hex 16)/g" .env

📋 (Optional) Add your custom CA

If your GitLab instance uses a TLS certificate signed by your own certificate authority (CA):

- Add the CA certificate file in

podman ?

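Before preparing podman, it can be worth confirming that the secret generation step replaced every placeholder in `.env`. The sketch below assumes all placeholders share the `REPLACE_ME_BY_` prefix seen in the `sed` commands of the secrets step. `check_no_placeholders` is a hypothetical helper, and the demo file and its variable name are made up.

```shell
# Hypothetical helper: fails if any REPLACE_ME_BY_ placeholder is still present.
check_no_placeholders() {
  env_file=$1
  # grep -n prints the offending lines with their line numbers
  if grep -n 'REPLACE_ME_BY_' "$env_file"; then
    echo "placeholders remain in $env_file" >&2
    return 1
  fi
  echo "no placeholder left in $env_file"
}

# Demonstration on a throwaway file (EXAMPLE_SECRET is a made-up name)
demo=$(mktemp)
printf 'EXAMPLE_SECRET="REPLACE_ME_BY_SECRET_KEY"\n' > "$demo"
check_no_placeholders "$demo" || true   # reports the remaining placeholder
printf 'EXAMPLE_SECRET="0123abcd"\n' > "$demo"
check_no_placeholders "$demo"           # passes
rm -f "$demo"
```

On a real installation the call would be `check_no_placeholders .env`, run from the repository directory.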

📄 Prepare podman for launch

Create the podman network:

    podman network create intranet

Enable the podman socket:

    systemctl --user start podman.socket
    systemctl --user enable podman.socket

If you encounter the error "Failed to connect to bus: No medium found", run these commands as a user with sudo rights:

    sudo loginctl enable-linger <your_local_user>
    sudo systemctl --user -M <your_local_user>@ start podman.socket
    sudo systemctl --user -M <your_local_user>@ enable podman.socket

Generate the podman config files:

    set -a; source .env; set +a
    export uid=$(id -u)
    envsubst < podman.yml.example > podman.yml
    envsubst < configmap.yml.example > configmap.yml

Allow the local user to bind port 80 and above:

- Add this line to the /etc/sysctl.conf file as root or with sudo:

      net.ipv4.ip_unprivileged_port_start=80

- Restart sysctl:

      sudo systemctl restart systemd-sysctl
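To confirm that the sysctl change is active, the current threshold can be read back from the kernel. This is a small sketch rather than an official check: `port_is_unprivileged` is a hypothetical helper, and the `/proc` path is the standard Linux location for this setting.

```shell
# Hypothetical helper: returns 0 when port $2 is at or above threshold $1,
# i.e. when an unprivileged user is allowed to bind it.
port_is_unprivileged() {
  [ "$2" -ge "$1" ]
}

# Read the current threshold; this /proc file only exists on Linux.
proc_file=/proc/sys/net/ipv4/ip_unprivileged_port_start
if [ -r "$proc_file" ]; then
  start=$(cat "$proc_file")
  for port in 80 443; do
    if port_is_unprivileged "$start" "$port"; then
      echo "port $port: usable by an unprivileged user (threshold $start)"
    else
      echo "port $port: still privileged (threshold $start)"
    fi
  done
else
  echo "cannot read $proc_file on this system"
fi
```

If port 80 is still reported as privileged, the new value has not been applied yet.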

🚀 Launch the application

Tip

You have successfully installed Plumber on your server 🎉

Now you can launch the application and ensure everything works as expected.

Run the following command to start the system:

    podman play kube podman.yml --configmap configmap.yml --network intranet

Info

If you need to change some configuration files and relaunch the application, run the command again with the --replace option after your updates:

    podman play kube podman.yml --replace --configmap configmap.yml --network intranet

⏫ Update

Follow these steps to update your self-managed instance to a new version:

Navigate to the location of your platform git repository

Update it:

    git pull

Open the .env.example file and copy the values of the FRONTEND_IMAGE_TAG and BACKEND_IMAGE_TAG variables

Edit the .env file by updating the values of the FRONTEND_IMAGE_TAG and BACKEND_IMAGE_TAG variables with the values previously copied:

    FRONTEND_IMAGE_TAG="<new frontend version>"
    BACKEND_IMAGE_TAG="<new backend version>"

Restart your containers. First regenerate the podman config files:

    set -a; source .env; set +a
    export uid=$(id -u)
    envsubst < podman.local.yml.example > podman.yml
    envsubst < configmap.local.yml.example > configmap.yml

Then relaunch the application with the --replace command from the Launch section.

You have successfully updated Plumber on your server 🎉
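The `set -a; source .env; set +a` line used when generating the podman files matters because `envsubst` only substitutes variables that are exported to its environment. The sketch below illustrates the difference with a throwaway file and a made-up variable, `DEMO_DOMAIN`. It uses `.`, the portable spelling of `source`, and a child `sh` process stands in for `envsubst`.

```shell
# Throwaway file standing in for .env; DEMO_DOMAIN is a made-up variable.
demo_env=$(mktemp)
printf 'DEMO_DOMAIN="plumber.mydomain.com"\n' > "$demo_env"

# Plain sourcing sets the variable in this shell only; child processes
# (such as envsubst) do not see it.
. "$demo_env"
sh -c 'echo "plain source: [${DEMO_DOMAIN:-}]"'

# With set -a, every variable assigned while sourcing is exported as well.
set -a
. "$demo_env"
set +a
sh -c 'echo "with set -a:  [${DEMO_DOMAIN:-}]"'

rm -f "$demo_env"
```

Run in a fresh shell where `DEMO_DOMAIN` is not already exported, the first line prints empty brackets and the second prints the domain name.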

🔄 Backup and restore

The following data is required to fully back up and restore a Plumber system:

- Configuration file: .env
- Databases:
  - PostgreSQL database of the Jobs service
- Files data:
  - File storing certificate data for the Traefik service

All of this data can be backed up and restored using two scripts from the installation git repository:

- scripts/backup_podman.sh
- scripts/restore_podman.sh

💽 Backup

To back up the system, go to your installation git repository and run the following command:

    ./scripts/backup_podman.sh 13

The script creates a backups directory and writes a backup archive inside it, named with the date (backup_plumber-$DATE).

Info

You can use a cron job to perform regular backups. Here is a cron job that launches a backup every day at 2 am:

    0 2 * * * /plumber-platform/scripts/backup_podman.sh 13

It can be added to your crontab with the command crontab -e.
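Daily backups accumulate over time, so a retention step may be useful alongside the cron job. The sketch below is an assumption-laden illustration, not part of the Plumber scripts. `prune_backups` is a hypothetical helper, it assumes each archive is a regular file whose name starts with `backup_plumber-`, and the 7-day retention is an arbitrary example value.

```shell
# Hypothetical helper: delete backup archives last modified more than
# KEEP_DAYS days ago. Assumes archives are regular files named backup_plumber-*.
prune_backups() {
  backup_dir=$1
  keep_days=$2
  find "$backup_dir" -maxdepth 1 -type f -name 'backup_plumber-*' \
    -mtime +"$keep_days" -print -delete
}

# Demonstration in a throwaway directory
demo_dir=$(mktemp -d)
touch -t 200001010000 "$demo_dir/backup_plumber-old"   # modified in year 2000
touch "$demo_dir/backup_plumber-recent"                # modified now
prune_backups "$demo_dir" 7                            # removes only the old file
ls "$demo_dir"
rm -rf "$demo_dir"
```

The `-print` flag lists each file as it is deleted, which makes the output easy to keep in a cron log.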

🛳️ Restore

To restore a backup from scratch on a new system, follow this process:

Make sure your new system meets the requirements

Copy the backup file to your new server

Clone the installation repository:

    git clone https://github.com/getplumber/platform.git plumber-platform
    cd plumber-platform

If the IP address of your server has changed since your previous installation, update your DNS records. See the Create DNS record step of the domain configuration

Launch the restore script:

    ./scripts/restore_podman.sh 13 <path_to_your_backup_file>

Danger

Did you encounter a problem during the restore process? See the troubleshooting section.